Cronbach’s alpha is one of the most widely used measures of internal consistency reliability in psychological and educational research. It helps researchers determine how well different items on a scale measure the same underlying construct. This guide will explain how to calculate and report Cronbach’s alpha in R, providing practical examples and interpretation guidelines.

What is Cronbach’s Alpha?

Cronbach’s alpha is defined mathematically as:

\[ \alpha = \frac{k}{k-1}(1-\frac{\sum_{i=1}^k \sigma^2_i}{\sigma^2_t}) \]

📝 Simple Analogy: Think of Cronbach’s alpha like a choir singing in harmony:

- A high alpha (close to 1) is like a choir singing perfectly in harmony – all singers are consistently hitting their notes and contributing to the same song.

- A low alpha (close to 0) is like a choir where each singer is singing a different song – there’s no consistency or harmony between the parts.

In technical terms, the symbols in the formula are:

- k is the number of items (like the number of singers)

- σ²ᵢ is the variance of item i (how much each singer varies from their own note)

- σ²ₜ is the variance of the total score (how much the whole choir varies together)
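To make the formula concrete, here is a minimal base-R sketch that applies it term by term. The data frame `toy_items` and the helper `cronbach_alpha_manual()` are made up for illustration; neither comes from the psych package.

```r
# Hypothetical toy data: 5 respondents answering 3 items on a 1-5 scale
toy_items <- data.frame(
  item1 = c(5, 4, 5, 4, 3),
  item2 = c(4, 4, 5, 3, 3),
  item3 = c(5, 3, 4, 4, 3)
)

# Illustrative helper (not a psych function): applies the alpha formula directly
cronbach_alpha_manual <- function(items) {
  k <- ncol(items)                        # k: number of items
  item_variances <- sapply(items, var)    # sigma^2_i: variance of each item
  total_variance <- var(rowSums(items))   # sigma^2_t: variance of the summed score
  (k / (k - 1)) * (1 - sum(item_variances) / total_variance)
}

alpha_manual <- cronbach_alpha_manual(toy_items)
print(round(alpha_manual, 4))  # 0.8298
```

Each item here has a variance of 0.7 (2.1 in total) while the summed score has a variance of 4.7, so alpha = (3/2) × (1 − 2.1/4.7) ≈ 0.83.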

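For most scales the coefficient falls between 0 and 1, with higher values indicating better internal consistency. It can turn negative when items are negatively correlated, which usually points to a reverse-coded item or a scoring error. Here’s how to interpret different ranges of Cronbach’s alpha: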

| Cronbach’s Alpha Range | Interpretation |
|---|---|
| α ≥ 0.9 | Excellent internal consistency |
| 0.8 ≤ α < 0.9 | Good internal consistency |
| 0.7 ≤ α < 0.8 | Acceptable internal consistency |
| 0.6 ≤ α < 0.7 | Questionable internal consistency |
| 0.5 ≤ α < 0.6 | Poor internal consistency |
| α < 0.5 | Unacceptable internal consistency |

Example 1: Calculating Cronbach’s Alpha for a Simple Scale

Let’s start with a simple example using a hypothetical 5-item satisfaction survey:

```r
# Install (only needed once) and load the required package
install.packages("psych")
library(psych)

# Create sample data: 10 respondents, 5 items rated 1-5
satisfaction_data <- data.frame(
  q1 = c(5, 4, 5, 4, 3, 2, 4, 5, 4, 3),
  q2 = c(4, 4, 5, 3, 3, 2, 5, 4, 4, 3),
  q3 = c(5, 3, 4, 4, 3, 1, 4, 5, 4, 2),
  q4 = c(4, 4, 4, 3, 3, 2, 4, 4, 3, 3),
  q5 = c(5, 4, 5, 4, 2, 2, 5, 5, 4, 3)
)

# Calculate Cronbach's alpha
alpha_result <- psych::alpha(satisfaction_data)

# Summary statistics for the whole scale
print(alpha_result$total)
```

This would output:

```
 raw_alpha std.alpha   G6(smc) average_r      S/N        ase mean        sd  median_r
 0.9515656 0.9621399 0.9705628 0.8355968 25.41304 0.02147595 3.68 0.9531235 0.8502968
```

Interpretation of Results

Let's break down these key reliability metrics:

- Raw alpha (0.95): This indicates excellent internal consistency. Being well above 0.90, it suggests that your scale items are very highly interrelated and measuring the same underlying construct.

- Standardized alpha (0.96): The standardized alpha is slightly higher than the raw alpha, indicating that standardizing the items marginally improves the scale's reliability. This suggests your items have somewhat different variances.

- Average inter-item correlation (0.84): This is quite high, as good inter-item correlations typically range from 0.30 to 0.50. The very high correlation (0.84) suggests your items might be somewhat redundant - they may be measuring the same aspect of the construct in different ways.

- Signal-to-Noise Ratio (S/N = 25.41): This is a very high S/N ratio, indicating that your scale has a strong "true score" component compared to error variance.

- Standard Error (ase = 0.02): The small standard error suggests high precision in the alpha estimate.
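Several of these numbers are tied together by simple formulas. As a rough base-R sketch (not psych's internal code), the standardized alpha and the S/N ratio above can be reproduced from the average inter-item correlation. The variable names below are chosen for illustration.

```r
# Same 5-item satisfaction data as in Example 1
satisfaction_data <- data.frame(
  q1 = c(5, 4, 5, 4, 3, 2, 4, 5, 4, 3),
  q2 = c(4, 4, 5, 3, 3, 2, 5, 4, 4, 3),
  q3 = c(5, 3, 4, 4, 3, 1, 4, 5, 4, 2),
  q4 = c(4, 4, 4, 3, 3, 2, 4, 4, 3, 3),
  q5 = c(5, 4, 5, 4, 2, 2, 5, 5, 4, 3)
)

k <- ncol(satisfaction_data)
cor_matrix <- cor(satisfaction_data)

# Average inter-item correlation: mean of the off-diagonal correlations
average_r <- mean(cor_matrix[lower.tri(cor_matrix)])

# Standardized alpha depends only on k and the average correlation
std_alpha <- (k * average_r) / (1 + (k - 1) * average_r)

# Signal-to-noise ratio: reliable variance relative to error variance
signal_to_noise <- std_alpha / (1 - std_alpha)

print(c(average_r = average_r, std_alpha = std_alpha, S_N = signal_to_noise))
```

Because standardized alpha is a function of only `k` and `average_r`, a very high average correlation (0.84 here) pushes alpha toward 1 even with few items. That is why such values are often read as a hint of redundancy.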

Practical Implications

While this scale shows excellent reliability (α > 0.95), it might be worth examining whether some items are redundant, as reliability this high sometimes indicates item redundancy. Consider:

- Looking at item-total correlations to identify potentially redundant items

- If scale length is a concern, you could potentially remove some items while maintaining excellent reliability

- For most practical purposes, an alpha between 0.80 and 0.90 is often considered a good target: reliable without being redundant

Example 2: Analyzing Multiple Subscales

Often, questionnaires contain multiple subscales - groups of related items that measure different aspects of a broader concept. For example, a job satisfaction survey might include separate subscales for satisfaction with pay (3 items about salary and benefits), work-life balance (4 items about hours and flexibility), and team dynamics (5 items about coworker relationships). Each subscale needs its own reliability analysis since they measure distinct constructs. Here's how to analyze them separately:

```r
# Create sample data with two subscales
questionnaire_data <- data.frame(
  # Satisfaction subscale
  sat1 = c(5, 4, 5, 4, 3),
  sat2 = c(4, 4, 5, 3, 3),
  sat3 = c(5, 3, 4, 4, 3),
  # Engagement subscale
  eng1 = c(4, 5, 4, 3, 4),
  eng2 = c(5, 4, 5, 4, 3),
  eng3 = c(4, 5, 4, 3, 4)
)

# Calculate alpha for each subscale (columns 1-3 and 4-6)
satisfaction_alpha <- psych::alpha(questionnaire_data[, 1:3])
engagement_alpha <- psych::alpha(questionnaire_data[, 4:6])

# Print results
print("Satisfaction Subscale:")
print(satisfaction_alpha$total$raw_alpha)
print("Engagement Subscale:")
print(engagement_alpha$total$raw_alpha)
```

This would output:

```
[1] "Satisfaction Subscale:"
[1] 0.8297872
[1] "Engagement Subscale:"
[1] 0.5555556
```

Interpretation of Results

Let's analyze the reliability of each subscale:

Satisfaction Subscale (α = 0.83)

- Shows good internal consistency (above 0.80 threshold)

- Suitable for research purposes

- No need for scale revision

Engagement Subscale (α = 0.56)

- Shows poor internal consistency (below 0.70 threshold)

- May need substantial revision

- Consider reviewing item wording and content

Practical Implications:

- The satisfaction items work well together as a scale

- The engagement items need improvement. Consider:

  - Reviewing item content for clarity
  - Checking for potential reverse-coded items
  - Adding more items to improve reliability
  - Piloting revised items with a larger sample

This analysis shows that while the satisfaction subscale is ready for use, the engagement subscale needs significant revision before it can be considered a reliable measure.
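Checking for reverse-coded items is the cheapest of these fixes to try. The sketch below uses made-up 1-5 ratings in which the item `c_rev` is worded in the opposite direction. The data frame `survey` and the helper `cronbach_alpha_manual()` are hypothetical names used only for this illustration.

```r
# Hypothetical 1-5 Likert data; item "c_rev" is worded in the opposite direction
survey <- data.frame(
  a     = c(5, 4, 5, 4, 3, 2, 4, 5, 4, 3),
  b     = c(4, 4, 5, 3, 3, 2, 5, 4, 4, 3),
  c_rev = c(1, 3, 2, 2, 3, 5, 2, 1, 2, 4)
)

# Illustrative helper: Cronbach's alpha from item and total-score variances
cronbach_alpha_manual <- function(items) {
  k <- ncol(items)
  (k / (k - 1)) * (1 - sum(sapply(items, var)) / var(rowSums(items)))
}

# Alpha with the reverse-worded item left as it was recorded
alpha_before <- cronbach_alpha_manual(survey)

# Reverse-score: on a scale from scale_min to scale_max, new = (min + max) - old
scale_min <- 1
scale_max <- 5
survey_fixed <- survey
survey_fixed$c_rev <- (scale_min + scale_max) - survey$c_rev

# Alpha after recoding so all items point the same way
alpha_after <- cronbach_alpha_manual(survey_fixed)

print(c(before = alpha_before, after = alpha_after))
```

With the item left unrecoded, alpha comes out negative. After recoding it is above 0.9. A negative or unexpectedly low alpha is therefore worth checking against the questionnaire's scoring key before any items are rewritten.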

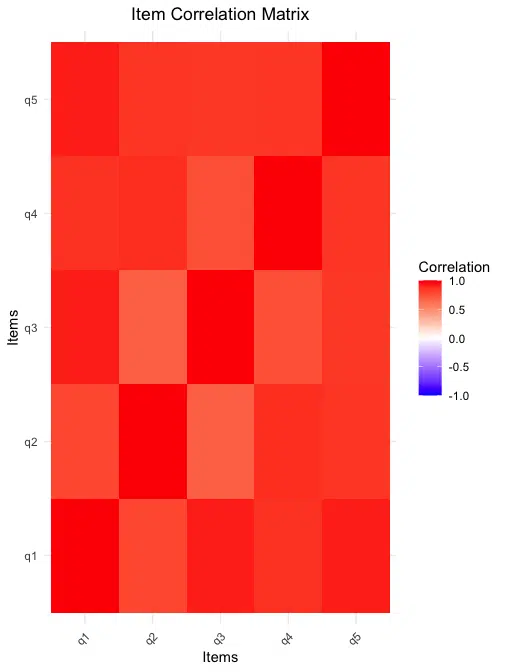

Visualizing Cronbach's Alpha Results

We can create a heatmap of the inter-item correlations to show how the items relate to one another:

```r
library(ggplot2)
library(reshape2)

# Calculate correlation matrix
cor_matrix <- cor(satisfaction_data)

# Reshape to long format (columns Var1, Var2, value) and draw the heatmap
ggplot(data = melt(cor_matrix), aes(x = Var1, y = Var2, fill = value)) +
  geom_tile() +
  scale_fill_gradient2(low = "blue", high = "red", mid = "white",
                       midpoint = 0, limits = c(-1, 1), name = "Correlation") +
  theme_minimal() +
  labs(title = "Item Correlation Matrix", x = "Items", y = "Items") +
  theme(axis.text.x = element_text(angle = 45, hjust = 1))
```

Using R's psych Package

The psych package's alpha() function provides two essential analyses for understanding your scale's reliability. First, item.stats shows how well each question correlates with the total score, with higher correlations indicating better-performing items. Second, alpha.drop reveals how removing each item would affect the scale's overall reliability - if alpha increases when an item is removed, that item might not be measuring the same thing as the others. Together, these analyses help you determine if all your items are working well together to measure your intended construct.

```r
# Get detailed item statistics
item_stats <- psych::alpha(satisfaction_data, check.keys = TRUE)
print(item_stats$item.stats)

# Calculate alpha if item deleted
print(item_stats$alpha.drop)
```

This would output:

```
    n     raw.r     std.r     r.cor    r.drop mean        sd
q1 10 0.9706550 0.9658048 0.9678151 0.9536370  3.9 0.9944289
q2 10 0.8896632 0.9020698 0.8753839 0.8341511  3.7 0.9486833
q3 10 0.9184289 0.9031589 0.8868781 0.8549750  3.5 1.2692955
q4 10 0.9136582 0.9302549 0.9108445 0.8835704  3.4 0.6992059
q5 10 0.9620367 0.9583185 0.9495901 0.9335527  3.9 1.1972190

   raw_alpha std.alpha   G6(smc) average_r      S/N   alpha se       var.r     med.r
q1 0.9256705 0.9443625 0.9411677 0.8092829 16.97347 0.03349471 0.005059260 0.8451709
q2 0.9459910 0.9605126 0.9610888 0.8587797 24.32453 0.02217352 0.004108443 0.8562074
q3 0.9486964 0.9602479 0.9603522 0.8579338 24.15589 0.02214440 0.001881694 0.8570131
q4 0.9489177 0.9535391 0.9579142 0.8368910 20.52349 0.02555552 0.007739668 0.8459767
q5 0.9293952 0.9463316 0.9559664 0.8150968 17.63294 0.03400948 0.007448663 0.8260166
```

When you run item statistics, you get these column headers:

- n: Number of valid responses

- raw.r: Raw correlation between item and total score

- std.r: Standardized item-total correlation

- r.cor: Correlation corrected for item overlap

- r.drop: Correlation between the item and the total of the remaining items (the item itself is excluded from the total)

- mean: Average score for the item

- sd: Standard deviation of the item
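To see what r.drop measures, it can be recomputed by hand. This is a base-R sketch rather than psych's own implementation, and `r_drop` is just an illustrative variable name.

```r
# Same 5-item satisfaction data as in Example 1
satisfaction_data <- data.frame(
  q1 = c(5, 4, 5, 4, 3, 2, 4, 5, 4, 3),
  q2 = c(4, 4, 5, 3, 3, 2, 5, 4, 4, 3),
  q3 = c(5, 3, 4, 4, 3, 1, 4, 5, 4, 2),
  q4 = c(4, 4, 4, 3, 3, 2, 4, 4, 3, 3),
  q5 = c(5, 4, 5, 4, 2, 2, 5, 5, 4, 3)
)

# Corrected item-total correlation: each item against the sum of the OTHER items
r_drop <- sapply(names(satisfaction_data), function(item) {
  other_items <- satisfaction_data[, names(satisfaction_data) != item, drop = FALSE]
  cor(satisfaction_data[[item]], rowSums(other_items))
})

print(round(r_drop, 3))
```

Leaving the item out of the total matters most for short scales. With only five items, each item makes up a fifth of the total score, so raw.r is inflated by the item correlating with itself.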

The alpha.drop statistics, each computed with the item in that row removed from the scale, include:

- raw_alpha: Scale reliability if item is removed

- std.alpha: Standardized alpha if item is removed

- G6(smc): Guttman's lambda 6 reliability - often considered a better lower bound estimate than alpha

- average_r: Average inter-item correlation

- S/N: Signal-to-noise ratio

- alpha se: Standard error of alpha

- var.r: Variance of inter-item correlations

- med.r: Median inter-item correlation
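The raw_alpha column can be reproduced with a short loop that removes one item at a time. The sketch below uses base R only. `cronbach_alpha_manual()` and `alpha_if_dropped` are illustrative names, not part of psych.

```r
# Same 5-item satisfaction data as in Example 1
satisfaction_data <- data.frame(
  q1 = c(5, 4, 5, 4, 3, 2, 4, 5, 4, 3),
  q2 = c(4, 4, 5, 3, 3, 2, 5, 4, 4, 3),
  q3 = c(5, 3, 4, 4, 3, 1, 4, 5, 4, 2),
  q4 = c(4, 4, 4, 3, 3, 2, 4, 4, 3, 3),
  q5 = c(5, 4, 5, 4, 2, 2, 5, 5, 4, 3)
)

# Illustrative helper: Cronbach's alpha from item and total-score variances
cronbach_alpha_manual <- function(items) {
  k <- ncol(items)
  (k / (k - 1)) * (1 - sum(sapply(items, var)) / var(rowSums(items)))
}

alpha_full <- cronbach_alpha_manual(satisfaction_data)

# Recompute alpha on the remaining four items after dropping each item in turn
alpha_if_dropped <- sapply(names(satisfaction_data), function(item) {
  cronbach_alpha_manual(
    satisfaction_data[, names(satisfaction_data) != item, drop = FALSE]
  )
})

print(round(alpha_full, 4))
print(round(alpha_if_dropped, 4))
```

An item whose entry in `alpha_if_dropped` is higher than `alpha_full` would be a candidate for removal. In this data set every entry is lower.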

Item Performance Analysis

Let's analyse the results:

- Item Correlations:

  - Q1 shows the highest item-total correlation (raw.r = 0.97)
  - Q2 has the lowest item-total correlation (raw.r = 0.89)
  - All items show very strong correlations (> 0.85)

- Item Means:

  - Means range from 3.4 to 3.9
  - Q1 and Q5 show the highest means (3.9)
  - Q4 shows the lowest mean (3.4)

- Standard Deviations:

  - Q3 shows the highest variability (SD = 1.27)
  - Q4 shows the lowest variability (SD = 0.70)

Practical Implications

Item Retention Analysis:

- All items appear to be functioning well: removing any single item lowers raw alpha (from 0.95 to between 0.93 and 0.95), so no deletion would improve the scale's reliability

- Removing Q1 or Q5 would cause the largest decrease in reliability, suggesting these are particularly strong items

- Q2 shows the lowest correlation but still performs adequately

Recommendations:

- Keep all items in their current form as the scale shows excellent reliability

- Consider Q1 and Q5 as anchor items for future scale development

- Monitor Q2's performance in future administrations

Interpreting Cronbach's Alpha Values

When reporting Cronbach's alpha in research, follow these guidelines:

- Report the overall alpha value for the complete scale

- Report alpha values for any subscales separately

- Include the number of items in each scale/subscale

- Report any problematic items identified through "alpha if item deleted"

Example write-up:

"The internal consistency reliability of the 5-item satisfaction scale was good (Cronbach's α = .89). The subscales were analysed separately: reliability was excellent for the satisfaction subscale (α = .92, 3 items) and good for the engagement subscale (α = .87, 3 items)." The values in this template are illustrative. Substitute the results from your own analysis.
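If you report many scales, the repetitive part of such a write-up can be generated from the numbers. The helper `format_alpha_report()` below is a hypothetical convenience function, not part of psych. It follows the common convention, also used in the write-up above, of dropping the leading zero.

```r
# Hypothetical helper: builds a short reporting string for one scale
format_alpha_report <- function(scale_name, alpha_value, n_items) {
  # Two decimals, leading zero removed (e.g. 0.95 becomes .95, -0.12 becomes -.12)
  alpha_text <- sub("^(-?)0\\.", "\\1.", sprintf("%.2f", alpha_value))
  sprintf("%s: Cronbach's \u03b1 = %s (%d items)", scale_name, alpha_text, n_items)
}

# Example call using the raw alpha from Example 1
report_line <- format_alpha_report("Satisfaction scale", 0.9515656, 5L)
cat(report_line, "\n")
```

The interpretive label (good, excellent and so on) is deliberately left out, because the thresholds are conventions that are better applied by the researcher than hard-coded.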

Assumptions and Limitations

When using Cronbach's alpha, consider these important points:

- Tau-equivalence: Alpha assumes all items contribute equally to the construct being measured (equal true-score loadings). When this does not hold, alpha tends to underestimate reliability.

- Unidimensionality: The scale should measure a single construct.

- Sample Size: Larger samples provide more stable estimates.

- Number of Items: Alpha tends to increase as items are added, even when the items are no more strongly related to one another.
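The effect of scale length can be illustrated with the standardized-alpha formula, which depends only on the number of items and the average inter-item correlation. In this sketch the function name `standardized_alpha()` and the correlation of 0.30 are arbitrary choices made for illustration.

```r
# Standardized alpha as a function of scale length k and average inter-item correlation
standardized_alpha <- function(k, average_r) {
  (k * average_r) / (1 + (k - 1) * average_r)
}

# Hold the average correlation fixed at a modest 0.30 and vary only the number of items
n_items <- c(3, 5, 10, 20)
alpha_by_length <- standardized_alpha(n_items, average_r = 0.30)

print(data.frame(n_items = n_items, alpha = alpha_by_length))
```

With the item quality held constant, alpha climbs from roughly 0.56 with 3 items to roughly 0.90 with 20. A high alpha on a long scale is therefore weaker evidence of consistency than the same alpha on a short one.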

Conclusion

Cronbach's alpha is a valuable tool for assessing scale reliability in R. The psych package makes it easy to calculate and report these statistics. Remember to:

- Check assumptions before analysis

- Report both overall and subscale reliability

- Consider item-level statistics

- Interpret results in context of your research

Have fun and happy researching!

Further Reading

To deepen your understanding of Cronbach's alpha and reliability analysis, here are some helpful online resources:

- Cronbach's Alpha Calculator - An online tool for quick reliability calculations

- The R Project for Statistical Computing - Download R for your analysis

- The psych package documentation - Complete guide to psychological statistics in R

Suf is a senior advisor in data science with deep expertise in Natural Language Processing, Complex Networks, and Anomaly Detection. Formerly a postdoctoral research fellow, he applied advanced physics techniques to tackle real-world, data-heavy industry challenges. Before that, he was a particle physicist at the ATLAS Experiment of the Large Hadron Collider. Now, he’s focused on bringing more fun and curiosity to the world of science and research online.