Machine learning is the science of programming a computer to learn from data and make inferences. In the past, machine learning tasks involved manually coding all of the algorithms and mathematical and statistical formulae. Nowadays, we have fantastic programming languages like Python, with its abundance of libraries, frameworks, and modules fine-tuned for data science and machine learning. Access to tools for statistical data analysis, numerical computation, visualization, web scraping, database handling, deep learning, and more has made Python one of the most popular programming languages both in the machine learning and data science industries and globally. This blog post will go over the top Python libraries you need to make your data science and machine learning projects as effortless as possible. I will also suggest possible alternatives to these libraries and, in some cases, draw comparisons. In other cases, you will find that libraries and frameworks complement each other and enhance your development experience.

Check Python Version

Python’s libraries and packages are versioned, and the latest versions may only be compatible with certain releases of Python. For more information on how to check which version of Python you are using, from the command line or programmatically, see my article “How to Check Python Version for Linux, Mac, and Windows”.
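As a minimal sketch of the programmatic route, the standard-library `sys` and `platform` modules report the running interpreter's version. The minimum version `(3, 8)` below is a hypothetical threshold chosen only for illustration; check each library's own documentation for its real requirements. From the command line, `python --version` gives the same information.

```python
import platform
import sys

# Human-readable version string of the running interpreter, e.g. "3.11.4"
print("Python", platform.python_version())

# sys.version_info behaves like a tuple, so it compares cleanly with tuples
MIN_VERSION = (3, 8)  # hypothetical minimum, for illustration only
if sys.version_info < MIN_VERSION:
    raise SystemExit(f"Python {MIN_VERSION[0]}.{MIN_VERSION[1]} or newer is required")
print("Version check passed")
```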

NumPy

NumPy (Numerical Python) is the essential package for numerical computation in Python. NumPy is the go-to library for working with n-dimensional arrays, scientific calculations, and mathematics; it comes with sets of mathematical functions, including linear algebra routines and Fourier transforms. The NumPy array, or ndarray, is significantly faster than traditional Python lists for numerical work, making NumPy the preferred library for speed and efficiency of computation.

Features of NumPy

- High-performance N-dimensional array object with homogeneous elements

- Contains tools for integrating C/C++ and Fortran code

- Linear algebra, Fourier transform and other mathematical operations on arrays

- Functions for finding elements in an array including where, nonzero and count_nonzero

NumPy is a fundamental Python library, but it is not installed automatically when you install Python. You can install NumPy on your system by following the steps in the article “How to Solve Python ModuleNotFoundError: no module named ‘numpy’”.
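Once NumPy is installed, the short sketch below exercises several of the features listed above: a homogeneous ndarray, vectorized arithmetic, `where`/`nonzero`/`count_nonzero`, a linear solve, and a Fourier transform. The arrays are arbitrary values chosen for illustration.

```python
import numpy as np

# A 2-D ndarray: every element shares one dtype (here float64)
a = np.array([[1.0, 2.0], [3.0, 4.0]])

# Vectorized arithmetic applies element-wise without a Python loop
doubled = a * 2

# Finding elements with nonzero, where and count_nonzero
values = np.array([0, 3, 0, 5, 7])
positive_idx = np.nonzero(values)[0]       # indices of the non-zero entries
clipped = np.where(values > 4, values, 0)  # keep values > 4, zero out the rest
n_nonzero = np.count_nonzero(values)

# Linear algebra: solve the system a @ x = b
b = np.array([5.0, 11.0])
x = np.linalg.solve(a, b)

# Discrete Fourier transform of a short signal
spectrum = np.fft.fft(np.array([1.0, 0.0, -1.0, 0.0]))

print(doubled, positive_idx, clipped, n_nonzero, x, spectrum, sep="\n")
```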

Alternatives to NumPy

SymPy

SymPy stands for Symbolic Mathematics in Python. It is one of the core libraries of the SciPy ecosystem alongside NumPy, Pandas, and Matplotlib. SymPy enables manipulation of mathematical expressions and is used to solve advanced mathematical problems that require differentiation, integration, and linear algebra. SymPy aims to be an alternative to frameworks such as Mathematica or Maple while keeping the code as simple as possible and easily extensible.
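To make the contrast with NumPy concrete, here is a minimal SymPy sketch: results stay exact symbolic expressions rather than floating-point numbers. The expressions are illustrative choices.

```python
import sympy as sp

x = sp.symbols("x")
expr = sp.sin(x) * sp.exp(x)

derivative = sp.diff(expr, x)                # exact symbolic derivative
antiderivative = sp.integrate(sp.cos(x), x)  # indefinite integral of cos(x)
roots = sp.solve(sp.Eq(x**2 - 4, 0), x)      # exact roots of x^2 = 4

# Symbolic linear algebra: the determinant is computed exactly, without rounding
M = sp.Matrix([[1, 2], [3, 4]])
det = M.det()

print(derivative, antiderivative, roots, det, sep="\n")
```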

Pandas

Pandas is the standard data science library for flexible and robust data analysis and manipulation. Pandas provides two data structures, Series and DataFrame. A Series is a one-dimensional labeled array, similar to a NumPy array. A DataFrame is a two-dimensional table whose columns are Series objects, similar to a table in statistical software like Excel or SPSS. For a beginner’s tutorial on Pandas for data science, see our article “Introduction to Pandas: A Complete Tutorial for Beginners”.

Features of Pandas

- Fast and efficient DataFrame structure for default and customized indexing.

- Flexible loading of data into in-memory data objects from different file formats

- Handling of missing data

- Label-based slicing, indexing and subsetting of large data sets

- Group by data for aggregation and transformations

- Powerful merging and joining of data
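The sketch below touches several of these features on a tiny in-memory dataset. The column names and values are made up for illustration; in practice you would usually load data with a reader such as `pd.read_csv`.

```python
import numpy as np
import pandas as pd

# A Series is a one-dimensional labeled array
temps = pd.Series([21.5, np.nan, 19.0], index=["Mon", "Tue", "Wed"])

# A DataFrame is a table whose columns are Series
sales = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "units": [10, np.nan, 7, 5],
})

# Handling missing data: replace NaN with 0
sales["units"] = sales["units"].fillna(0)

# Group by for aggregation
totals = sales.groupby("region")["units"].sum()

# Merging/joining with another table on a shared key column
managers = pd.DataFrame({"region": ["north", "south"], "manager": ["Ana", "Bo"]})
report = totals.reset_index().merge(managers, on="region", how="left")

# Label-based selection with .loc
print(temps.loc["Mon"], report, sep="\n")
```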

Alternatives to Pandas

Dask

Dask is a library for parallel computing. Dask lets you scale data science and machine learning workflows and integrates easily with NumPy, pandas, and scikit-learn. If you have larger-than-memory data, Dask can scale up your workflow to use all the cores on your local workstation or scale out to the cloud.

Modin

Modin uses Ray or Dask to provide an effortless way to speed up pandas notebooks, scripts, and libraries. Modin introduces its own lightweight, parallel DataFrame that mirrors the pandas API, so existing pandas code needs few changes. Modin can provide speed-ups of up to 4x on a laptop with four physical cores.

PySpark

PySpark is an interface for Apache Spark in Python. It enables you to write Spark applications using Python APIs and to analyze data interactively in a distributed environment using the PySpark shell. PySpark supports most of Spark’s features, including Spark SQL, DataFrame, Streaming, MLlib (Machine Learning), and Spark Core. Its pandas API allows pandas workloads to be scaled out.

Scikit-Learn

Scikit-learn is an extremely valuable library for machine learning in Python. The library provides an extensive set of tools for machine learning and statistical modeling, including regression, clustering, classification, and dimensionality reduction. The library is built on NumPy and SciPy (Scientific Python).

Features of Scikit-Learn

- Supervised learning algorithms, including generalized linear models, discriminant analysis, naive Bayes, support vector machines, and decision trees

- Unsupervised learning algorithms such as K-Means for grouping unlabeled data

- Similarity measures, including Jaccard similarity, cosine similarity, and Euclidean distance

- Cross-validation for estimating the performance of supervised models on unseen data

- Manifold learning for summarizing and describing complex multi-dimensional data

- Feature selection for identifying meaningful attributes from data to create supervised models

- Ensemble learning, where multiple supervised models are combined for predictions
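A minimal sketch of several of these features, using the Iris dataset that ships with scikit-learn (so no download is needed). The choice of a random forest, five folds, and three clusters is an illustrative assumption, not a recommendation.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics.pairwise import cosine_similarity
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)

# Supervised ensemble learning, evaluated on unseen folds with 5-fold cross-validation
forest = RandomForestClassifier(n_estimators=100, random_state=0)
scores = cross_val_score(forest, X, y, cv=5)
print(f"Mean CV accuracy: {scores.mean():.3f}")

# Unsupervised learning: K-Means groups the samples without looking at y
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

# Similarity measure between the first two samples
sim = cosine_similarity(X[:1], X[1:2])[0, 0]
print(f"Cosine similarity of samples 0 and 1: {sim:.4f}")
```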

Alternatives to Scikit-Learn

Edward

Edward is a library for probabilistic modeling, inference and criticism. It provides a testbed for rapid experimentation and prototyping ranging from classical hierarchical models to complex deep probabilistic models. Edward fuses three fields: Bayesian statistics and machine learning, deep learning, and probabilistic programming. Edward is built on TensorFlow and enables computational graphs, distributed training, CPU/GPU integration, and visualization with TensorBoard.

Spark MLlib

Spark MLlib provides a uniform set of high-level APIs that help users create and tune practical machine learning pipelines. MLlib provides standard learning algorithms such as classification, regression, and clustering. MLlib also supports feature extraction, transformation, dimensionality reduction, and selection. Algorithms can be saved, loaded, and integrated into pipelines.

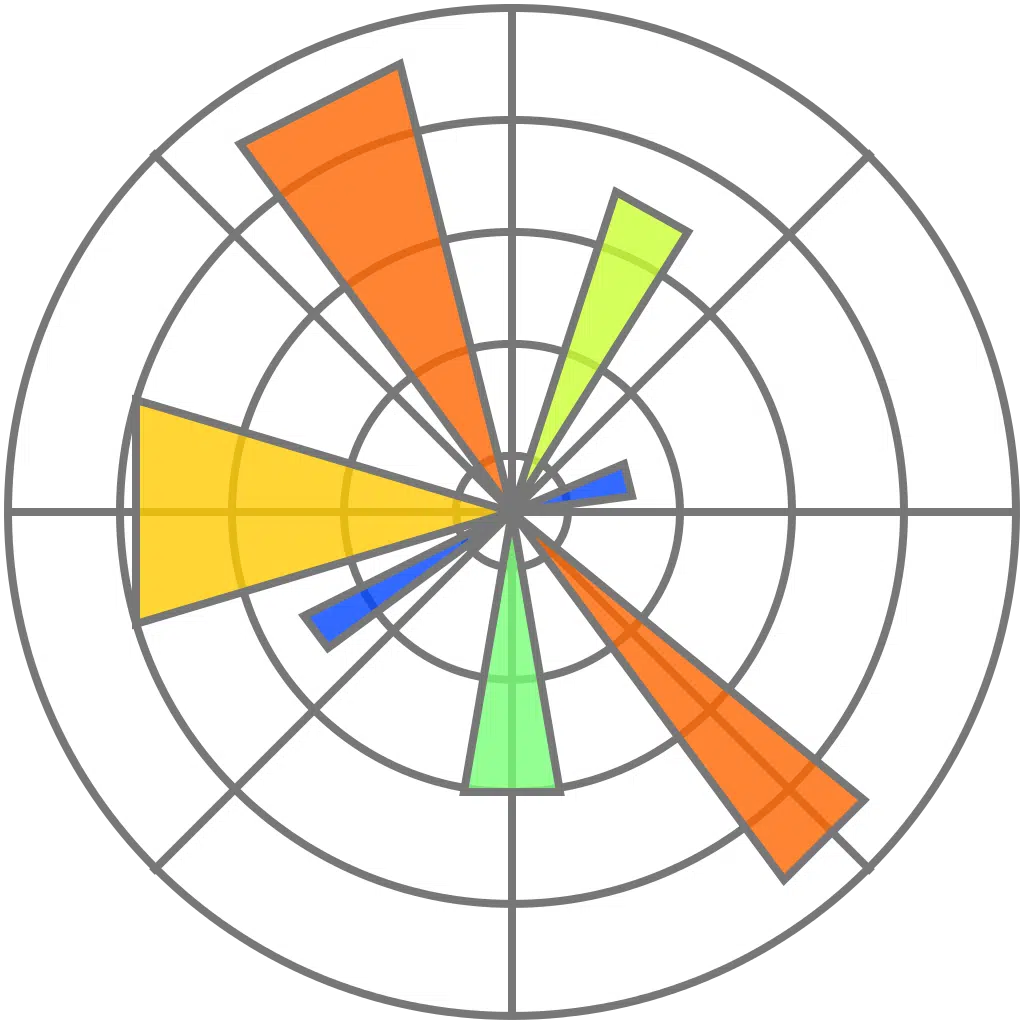

Matplotlib

Matplotlib is a 2D visualization library that produces high-quality figures in various hardcopy formats and interactive environments across platforms. Matplotlib can be used in Python scripts, the Python and IPython shells, web application servers, and various graphical user interface toolkits.

Features of Matplotlib

- Create publication-quality plots

- Make interactive figures that can zoom, pan, and update

- Extensive customization of visual style and layout

- Export to numerous file formats

- Embed in JupyterLab and Graphical User Interfaces

- Use third party packages for domain-specific visualization, including seaborn for statistical data visualization, Cartopy for geospatial data mapping, DNA Features Viewer to visualize DNA features, and WCSAxes for visualizing astronomical data.
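As a minimal sketch of customization and export, the script below draws two styled lines and saves the figure to a file. It selects the non-interactive `Agg` backend so it runs without a display; the output file name `trig.png` is a hypothetical choice, and changing the extension (for example to `.pdf` or `.svg`) changes the export format.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: works without a display
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 200)

fig, ax = plt.subplots(figsize=(6, 4))
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), linestyle="--", label="cos(x)")
ax.set_xlabel("x (radians)")
ax.set_ylabel("value")
ax.set_title("Customized line plot")
ax.legend()

# Export: the file extension determines the output format
out_path = os.path.join(tempfile.gettempdir(), "trig.png")  # hypothetical file name
fig.savefig(out_path, dpi=150)
plt.close(fig)
```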

Alternatives to Matplotlib

Seaborn

Seaborn is a data visualization library based on Matplotlib. Its plotting functions operate on DataFrames and arrays containing whole datasets, making it suitable for large amounts of data typically stored in a table or array.

ggplot

ggplot is a library for declaratively creating graphics based on the Grammar of Graphics.

plotly

plotly is an interactive, browser-based graphing library for Python. Through plotly, you have access to over 30 chart types, including scientific charts, 3D graphs, statistical charts, SVG maps, financial charts, and more. Plotly is also integrable with JupyterLab.

SciPy

SciPy is a library for mathematics, science, and engineering that includes statistics, optimization, integration, linear algebra, Fourier transforms, sigmoid functions, and more. The SciPy library depends on the fast N-dimensional array provided by NumPy.

Features of SciPy

- Modules for the following tasks: optimization, linear algebra, integration, interpolation, special functions, FFT, signal and image processing, and ODE solvers.

- High-level functionality for visualization and data manipulation

- Adaptable to parallel programming
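The sketch below calls one routine from several of the modules listed above. The test problems (the Rosenbrock function, the integral of sin(x) from 0 to π, a spline through samples of x², and the ODE dy/dt = -y) are illustrative choices with known answers.

```python
import numpy as np
from scipy import integrate, interpolate, optimize

# Optimization: minimize the Rosenbrock function (true minimum at [1, 1])
result = optimize.minimize(
    optimize.rosen, x0=np.array([1.3, 0.7]), method="BFGS", jac=optimize.rosen_der
)

# Integration: area under sin(x) from 0 to pi (exact answer is 2)
area, abs_err = integrate.quad(np.sin, 0, np.pi)

# Interpolation: a cubic spline through samples of x**2
xs = np.linspace(0, 10, 11)
spline = interpolate.CubicSpline(xs, xs**2)

# ODE solver: dy/dt = -y with y(0) = 1, whose exact solution is exp(-t)
sol = integrate.solve_ivp(lambda t, y: -y, t_span=(0, 1), y0=[1.0], rtol=1e-8, atol=1e-10)

print(result.x, area, spline(2.5), sol.y[0, -1], sep="\n")
```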

Alternatives to SciPy

Julia

Julia is a high-level, high-performance, dynamic, general-purpose programming language used chiefly for numerical analysis. It is just-in-time compiled and can match the speed of C. It can call Python, C, and Fortran libraries. Julia enables parallelization to a higher degree than Python. Julia has a host of statistical packages, including JuMP for mathematical optimization, Turing for sampling-based inference in Bayesian statistics and probabilistic machine learning, and HypothesisTests, which provides a wide range of hypothesis tests in pure Julia. Julia also has packages for machine learning, big data and parallel computing, geospatial and oceanographic work, and mathematics.

Keras

Keras is a minimalist, easy-to-learn, and highly modular neural network and deep learning framework. Keras is a high-level neural network API that was originally able to run on top of TensorFlow, Theano, or CNTK; current releases (Keras 3) run on top of TensorFlow, JAX, or PyTorch. Its primary purpose is to enable rapid experimentation.

Features of Keras

- Easy and rapid prototyping

- Runs seamlessly on CPU and GPU

- Supports a wide array of network types, including convolutional neural networks, recurrent networks, and generative adversarial networks

- Supports arbitrary network architectures, including multi-input and multi-output models

Alternatives to Keras

PyTorch

PyTorch is a deep learning framework that expresses models in idiomatic Python. PyTorch supports dynamic computation graphs, enabling you to change how the network behaves on the fly, unlike the static graphs used in frameworks such as TensorFlow 1.x.

DeepPy

DeepPy is a deep learning framework that allows for Pythonic programming based on NumPy’s ndarray. DeepPy implements feedforward networks, convolutional neural networks, siamese networks, and autoencoders. DeepPy runs on CPUs or on Nvidia GPUs using CUDArray. It is a less mature project than the other libraries here and should be considered a work in progress.

Theano

Theano is a library for fast numerical computation on a CPU or GPU. Theano takes mathematical expressions and transforms them into runnable code that uses NumPy, efficient libraries like BLAS, and native code (C++) to run as fast as possible on CPUs or GPUs. It applies a host of code optimizations to get maximum performance from the hardware. Theano has been available since 2007, and although it is a general library for scientific computing, it is particularly suited to deep learning thanks to its tensor operations and GPU support.

TensorFlow

TensorFlow is a machine learning framework created by Google to design, build, train, and deploy deep learning models. Computations are expressed as data flow graphs: nodes in the graph represent mathematical operations, while edges represent the data passed between them, usually multidimensional arrays, or tensors. Hence the name: a neural network’s operations on tensors amount to a flow of tensors through the graph.

Features of TensorFlow

- Visualization of neural networks as graphs

- Runs on CPU and GPU

- Parallel neural network training, where multiple neural networks can be trained in parallel using GPUs

- Visualization of training loss and accuracy distributions, event logging, and event summaries using TensorBoard

TensorFlow vs PyTorch

Both PyTorch and TensorFlow operate on tensors and view any network as a directed acyclic graph, but they differ significantly in how those graphs are defined. TensorFlow 1.x defines graphs statically before a model can run, whereas PyTorch lets you change and execute nodes on the fly, with no special session interface or placeholders. TensorFlow 2 executes eagerly by default, which narrows this gap. PyTorch is more Pythonic, whereas TensorFlow can feel more obscure and layered. Debugging in PyTorch is more flexible because computation graphs are defined at runtime, so you can use standard tools such as pdb, ipdb, and the PyCharm debugger. With TensorFlow 1.x graphs, the main option is tfdbg, which lets you evaluate TensorFlow expressions at runtime and browse all tensors and operations within the session scope.

TensorFlow vs Theano

Theano is a fully Python-based library, whereas TensorFlow is a hybrid C++/Python library. The combination of C++ and Python can be seen as a benefit for developers. Theano performs tasks faster than TensorFlow, especially single-GPU tasks, but TensorFlow takes the lead with multi-GPU tasks. With Theano, you have complete control over optimizers because they have to be hard-coded. Like TensorFlow, Theano integrates well with Keras, and it also works with other high-level wrappers such as Lasagne. TensorFlow is the more popular library and has more extensive documentation, applications, and community support.

spaCy

spaCy is a free, open-source library for advanced Natural Language Processing (NLP) in Python. spaCy is designed for production use and helps users build applications for NLP and natural language understanding (NLU) of large volumes of text. These applications include information extraction, NLU systems, and pre-processing text for deep learning.

Features of spaCy

- Preprocessing: tokenisation, sentence segmentation, lemmatisation, stopwords

- Linguistic features: part-of-speech tags, dependency parsing, named entity recognition

- Visualization of dependency trees and named entity recognition

- Pre-trained word embeddings

- Transfer learning capabilities using BERT-style pre-training

- Provides built-in word vectors

- Object-oriented design: processing text returns objects rather than plain strings, unlike some other libraries

Alternatives to spaCy

spaCy vs NLTK

NLTK is a string processing library: it takes strings as input and returns strings or lists of strings as output. spaCy, on the other hand, takes an object-oriented approach. When you parse text, spaCy returns a document object whose words and sentences are themselves objects. spaCy performs better at word tokenization and part-of-speech tagging, whereas NLTK outperforms spaCy at sentence tokenization. Because of its object-oriented design, spaCy is better suited to production environments and behaves more like a service than a toolkit. spaCy supports fewer languages: at the time of writing, it offered models for only seven languages, whereas NLTK supports many more.

spaCy vs Gensim

Gensim is a Python library for topic modelling, document indexing, and similarity retrieval with large corpora. Gensim works with large datasets and processes data streams. Gensim provides TF-IDF vectorization, word2vec, doc2vec, latent semantic analysis, and latent Dirichlet allocation. Gensim is primarily designed for unsupervised text modeling and does not have enough functionality to provide an entire NLP pipeline. Ideally, you should combine Gensim with other libraries such as spaCy or NLTK.

Scrapy

Scrapy is a framework for large-scale web scraping. The framework provides all the tools needed to efficiently extract data from websites, process it, and store it in your preferred format. Scrapy uses spiders, which are self-contained crawlers given a set of instructions for extracting data from web pages. Scrapy is suited to large jobs and is built on Twisted, an asynchronous networking framework, for concurrency.

Features of Scrapy

- Generates feed exports in popular formats such as JSON, CSV, and XML

- Built-in support for selecting and extracting data from sources using either XPath or CSS expressions

- Requests are scheduled and processed asynchronously

- Scrapyd, a companion service that lets you upload projects and control spiders

- Decode JSON directly from websites that provide JSON data

- Selectors let you select particular data from a web page, such as a heading, and use lxml for parsing, which is extremely fast

Alternatives to Scrapy

Scrapy vs BeautifulSoup

Scrapy is a complete framework for building spiders that scrape web pages. BeautifulSoup is a parsing library for getting specific elements out of a web page, for example, a list of images. BeautifulSoup is very easy to learn, and you can quickly use it to extract the data you want. It is recommended to use the library in combination with Requests to download the HTML source code.
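A minimal BeautifulSoup sketch of the "list of images" example. To keep it runnable offline, the HTML is an inline string standing in for what you would normally download with Requests (for example `requests.get(url).text`); the page content is invented for illustration.

```python
from bs4 import BeautifulSoup

# Stand-in for HTML downloaded with Requests; invented for illustration
html = """
<html>
  <body>
    <h1>Gallery</h1>
    <img src="/img/cat.png" alt="cat">
    <img src="/img/dog.png" alt="dog">
    <p class="note">Two images</p>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")  # Python's built-in parser, no lxml needed

title = soup.h1.get_text(strip=True)
image_sources = [img["src"] for img in soup.find_all("img")]
note = soup.select_one("p.note").get_text()  # CSS selectors are also supported

print(title, image_sources, note)
```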

Scrapy vs PySpider

PySpider is a powerful web crawler system in Python. It provides a web UI and a distributed architecture with components such as a scheduler, fetcher, and processor. It supports various databases for data storage, including MongoDB and MySQL, and can use RabbitMQ, Beanstalk, Redis, or Kombu as the message queue. Overall, PySpider is more of a service than a framework. Scrapy is more popular, with a healthier community and an abundance of resources, and Scrapy projects can also be run in a hosted cloud environment.

Scrapy vs requests-HTML

requests-HTML is an HTML parser library that lets you use CSS and XPath selectors to extract the information you want from a webpage. It is more suited to smaller-scale tasks compared to Scrapy. requests-HTML is used in combination with BeautifulSoup to parse the HTML source code.

Scrapy vs Selenium

Selenium is a framework for automating the testing of web applications. Its API is beginner-friendly and Pythonic, and it is also used to develop web spiders. Both Selenium and Scrapy can be used to scrape pages built with JavaScript, although Scrapy needs an add-on such as scrapy-splash or scrapy-playwright to render JavaScript, so Selenium is easier to use for JavaScript data extraction. Selenium is slower than Scrapy when crawling because it drives a real browser, which must load every file needed to render the page. Scrapy is therefore better suited to large website scraping tasks. Scrapy is also more extensible: you can quickly develop custom middleware or pipelines to add your own functionality.

OpenCV

OpenCV is a massive open-source library for computer vision and image processing. The library helps process images and videos for object detection, facial and handwriting recognition, and more.

Features of OpenCV

- Read and write images

- Capture and save videos

- Process images (filter, transform)

- Feature detection

- Video analysis, for example, motion estimation, background subtraction, and object tracking

Alternatives to OpenCV

OpenCV vs Scikit-image

Scikit-image, described as “Image processing in Python”, is a collection of image processing algorithms. It is written in Python, whereas OpenCV has C++, C, Python, and Java interfaces. Scikit-image provides I/O, filtering, morphology, transformations, annotation, color conversions, object detection, face detection, and more. OpenCV is better suited to server-based notebooks such as Google Colab or cloud notebook environments on Google Cloud or Azure. Scikit-image works very well in JupyterLab notebooks because it is not as heavy-duty as OpenCV.

Statsmodels

statsmodels is a Python module that provides classes and functions for estimating many different statistical models and conducting statistical tests and statistical data exploration.

Features of Statsmodels

- Contains advanced functions for statistical testing and modeling not found in numerical libraries like NumPy or SciPy.

- Linear regression

- Logistic regression

- Time series analysis

- Its approach to statistical analysis, including R-style model formulas, is closely aligned with the R programming language, making it a suitable library for data scientists who are already familiar with R and want to transition to Python.

- Works with Pandas DataFrames.
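The sketch below fits an ordinary least squares model with statsmodels' R-style formula interface directly on a pandas DataFrame. The data are synthetic, generated under the assumption y = 1 + 2x + noise, so that the estimated coefficients can be compared with known values.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

# Synthetic data (an assumption for illustration): y = 1 + 2x + Gaussian noise
rng = np.random.default_rng(0)
df = pd.DataFrame({"x": np.linspace(0, 10, 100)})
df["y"] = 1.0 + 2.0 * df["x"] + rng.normal(scale=0.5, size=len(df))

# R-style formula, fitted directly on the DataFrame
model = smf.ols("y ~ x", data=df).fit()

print(model.summary())  # coefficients, standard errors, t-tests, R-squared
print(model.params)     # estimated Intercept and slope for x
```

The same formula interface offers `smf.logit` for logistic regression.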

Alternatives to Statsmodels

Statsmodels vs Pandas

Pandas is primarily a package to handle and operate directly on data. statsmodels is mainly for traditional statistics and econometrics, with a much stronger emphasis on parameter estimation and statistical testing. statsmodels has Pandas as a dependency, whereas Pandas optionally uses statsmodels for statistical analysis.

Statsmodels vs Scipy.stats

statsmodels depends on SciPy, including scipy.stats. scipy.stats is organized more like a static library of functions, similar to NumPy and SciPy, whereas statsmodels provides a complete statistical framework similar to R. scipy.stats has a large number of distributions and most of the standard parametric and nonparametric statistical tests, while statsmodels is more focused on estimating statistical models.
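For comparison with the model-centric statsmodels style, here is a minimal scipy.stats sketch: distributions and tests are plain function calls on arrays. The two samples are synthetic, drawn from normal distributions with means chosen only for illustration.

```python
import numpy as np
from scipy import stats

# Distributions: the standard normal CDF at 0 is exactly 0.5
p_below_zero = stats.norm.cdf(0)

# Two synthetic samples (illustrative) with different means
rng = np.random.default_rng(42)
a = rng.normal(loc=0.0, scale=1.0, size=200)
b = rng.normal(loc=0.5, scale=1.0, size=200)

# Parametric test: Welch's two-sample t-test (does not assume equal variances)
t_res = stats.ttest_ind(a, b, equal_var=False)

# Nonparametric counterpart: the Mann-Whitney U test
u_res = stats.mannwhitneyu(a, b, alternative="two-sided")

print(p_below_zero, t_res.pvalue, u_res.pvalue)
```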

Flask

Flask is a web application framework: a collection of libraries and modules that lets web application developers write applications without handling low-level details such as protocols and thread management. Flask is a microframework, and its design keeps the core of an application flexible, simple, and scalable.

Features of Flask

- Development server and debugging tools

- Integrated support for unit testing

- RESTful request dispatching

- Uses the template engine Jinja

- Support for secure cookies (client side sessions)

- Compatible with Google App Engine
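A minimal Flask sketch of request dispatching and the built-in test client. The `/hello/<name>` route and the `hello` view are hypothetical examples; the test client sends the request in-process, so no server has to be running.

```python
from flask import Flask, jsonify

app = Flask(__name__)


# Request dispatching: the URL rule and HTTP method route requests to this view
@app.route("/hello/<name>", methods=["GET"])
def hello(name):  # hypothetical example view
    return jsonify(message=f"Hello, {name}!")


# Integrated unit-testing support: requests are handled in-process
with app.test_client() as client:
    response = client.get("/hello/Ada")
    status = response.status_code
    payload = response.get_json()

print(status, payload)

# To serve the app with the development server and debugger instead:
# app.run(debug=True)
```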

Alternatives to Flask

Flask vs Django

Django is a web framework that facilitates rapid development. Django uses the model-template-view (MTV) design pattern. It ships with many tools for application developers, such as an object-relational mapping (ORM) framework for creating virtual object databases, administration panels, directory structures, and more. Flask does not come with a built-in ORM framework; developers can use existing libraries or extensions such as Flask-SQLAlchemy or Flask-Pony. Django does not come with REST support built in, whereas Flask does, although REST development in Django is supported by the Django REST framework project. Flask uses Jinja2 out of the box; Django uses its own templating engine but can be configured to use Jinja2.

Flask vs FastAPI

FastAPI works similarly to Flask in that it supports deploying web applications with a minimal amount of code. FastAPI is faster than Flask because it is built on the Asynchronous Server Gateway Interface (ASGI), which supports concurrency and asynchronous code. Flask is built on the Python Web Server Gateway Interface (WSGI), which is synchronous; Flask 2.0 and later accept async view functions, but each request still occupies a WSGI worker. When you run a FastAPI application, it automatically generates documentation and an interactive Swagger UI, which lets you test the API endpoints more conveniently.

Flask vs Tornado

Tornado serves as a web framework and also an asynchronous networking library. By using non-blocking network I/O, Tornado can scale to tens of thousands of open connections, making it ideal for long polling, WebSockets, and other web applications that need a long-lived connection to each user. Tornado is perfect for use cases that are I/O intensive, for example, proxies, but not necessarily for compute-intensive cases. Flask provides REST support via extensions such as Flask-RESTful. Tornado does not have built-in support for REST API, but users can implement REST APIs manually.

How to Install Packages in Python

There are several ways to install packages in Python. I will briefly outline the three most common ways.

Installing Packages using Pip

First, ensure you have pip installed. Then enter the following from your command line:

pip install package-name

Replace “package-name” with the name of the package you want to install.
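To confirm from Python that an installation worked, the standard-library `importlib.metadata` module (Python 3.8+) can report a package's installed version. The `installed_version` helper below is a hypothetical convenience wrapper; note that the distribution name you pass is the name used with pip (for example `scikit-learn`), which can differ from the import name (`sklearn`).

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Hypothetical helper: return the installed version string, or None if absent."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


print(installed_version("numpy"))  # a version string, or None if NumPy is not installed
```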

Installing Packages using Conda

Once you have set up your conda virtual environment, you can install packages from the command line by entering:

conda install package-name

Replace “package-name” with the name of the package you want to install.

Installing Packages that Cannot Be Installed With Pip

- Download the package and extract it into a local directory.

- If the package comes with its own installation instructions, follow them. Otherwise, the package should come with a setup.py file, which you can use to install the package: open a terminal, change into the root directory containing setup.py, and enter:

python setup.py install

Summary

Python is a fantastic programming language that caters to the various niches of data science and machine learning. Its abundance of libraries and packages lets you expand the capabilities of your programs and algorithms. Python’s libraries form a rich and synergistic ecosystem, backed by an active support system and developer community. You now know which libraries are out there and which are suited to your tasks, and you have room to discover new libraries and frameworks that may offer something unique that you need. The choice is yours! If you want to learn more about why Python is such a good fit for machine learning, see my post “Why Python is Ideal for Machine Learning”. If you want more practical guidance on learning Python for data science and machine learning, visit my online courses page for Python-specific data science and machine learning courses. Stay tuned for more blog posts, and have fun researching!

Suf is a senior advisor in data science with deep expertise in Natural Language Processing, Complex Networks, and Anomaly Detection. Formerly a postdoctoral research fellow, he applied advanced physics techniques to tackle real-world, data-heavy industry challenges. Before that, he was a particle physicist at the ATLAS Experiment of the Large Hadron Collider. Now, he’s focused on bringing more fun and curiosity to the world of science and research online.