Introduction

ReLU (Rectified Linear Unit) revolutionized deep learning with its simplicity and efficiency, becoming the go-to activation function for neural networks. Defined as f(x) = max(0, x), ReLU activates only positive inputs, solving issues like vanishing gradients and enabling sparse, high-performance models. Let’s explore its PyTorch implementation and uncover why this powerhouse function is essential for modern AI.

Table of Contents

- Introduction

- What is ReLU?

- Visualizing ReLU

- Why Use ReLU?

- Comparison with Other Activation Functions

- Implementation in PyTorch

- Best Practices and Tips

- Common Issues and Solutions

- Vanishing Gradient Problem and How ReLU Resolves It

- Advanced Topics (ReLU Variants, Gradient Based Improvements, ReLU in CNNs)

- Summary

- Further Reading

What is ReLU?

Mathematical Definition

ReLU is defined mathematically as:

f(x) = max(0, x)💡Key Concept: This piecewise linear function has two key properties:

- For x ≤ 0: Output is 0

- For x > 0: Output is equal to x

Geometric Interpretation

ReLU creates a “hinge” at x=0, allowing the function to:

- Act as a linear function for positive inputs

- Completely block negative inputs

- Create sparse representations in neural networks

Visualizing ReLU

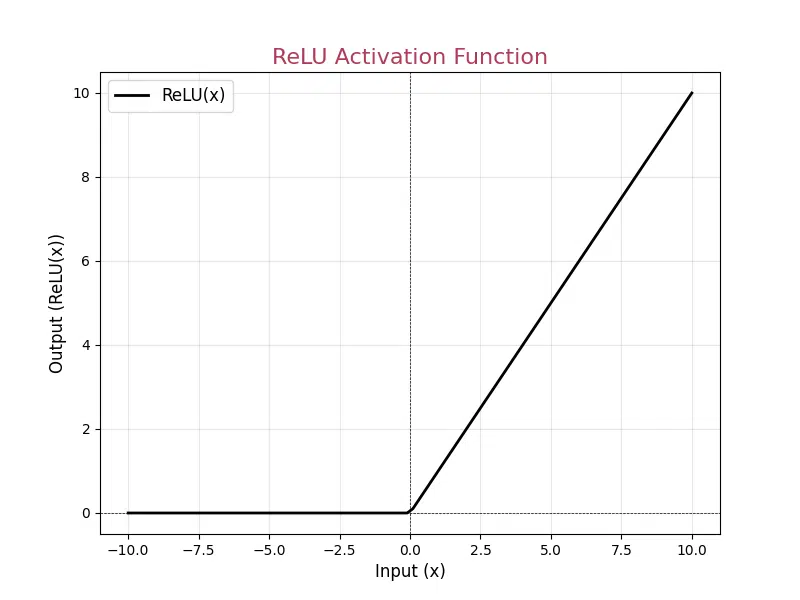

To better understand the behavior of the ReLU activation function, we can plot it over a range of input values using PyTorch and Matplotlib. This visualization demonstrates how the function outputs 0 for negative inputs and linearly increases for positive inputs.

import torch

import matplotlib.pyplot as plt

# Define the input range

x = torch.linspace(-10, 10, 100) # 100 points between -10 and 10

# Apply the ReLU function

relu = torch.nn.ReLU()

y = relu(x)

# Plot the ReLU function

plt.figure(figsize=(8, 6))

plt.plot(x.numpy(), y.numpy(), label="ReLU(x)", color="k", linewidth=2)

plt.axhline(0, color='black', linewidth=0.5, linestyle="--") # Add x-axis

plt.axvline(0, color='black', linewidth=0.5, linestyle="--") # Add y-axis

plt.title("ReLU Activation Function", fontsize=16, color="#b03b5a")

plt.xlabel("Input (x)", fontsize=12)

plt.ylabel("Output (ReLU(x))", fontsize=12)

plt.grid(alpha=0.3)

plt.legend(fontsize=12)

plt.show()The output of this code is a graph of the ReLU function:

💡 Key Observations

- The ReLU function outputs

0for all inputs less than or equal to0. - For positive inputs, the output is identical to the input, represented as a straight line with a slope of 1.

This behavior makes ReLU particularly effective for neural networks, introducing non-linearity without vanishing gradients for positive values.

Why Use ReLU?

ReLU’s popularity in deep learning stems from its ability to address critical challenges faced by earlier activation functions like Sigmoid and Tanh. By providing simplicity, efficiency, and effective gradient flow, ReLU has become the default choice for building robust neural networks. In this section, we delve into the key reasons behind ReLU’s widespread use and explore its advantages and challenges.

Key Advantages

- Computational Efficiency:

- ReLU is computationally simpler compared to other activation functions like Sigmoid or Tanh, which require exponential calculations.

- It performs a straightforward comparison operation to return the maximum value between 0 and the input, making it ideal for large-scale networks.

- The simplicity of the function enables faster forward propagation during inference and efficient backward propagation for gradient computations.

- Biological Plausibility:

- The behavior of ReLU is inspired by biological neurons, which only fire when the input signal surpasses a certain threshold.

- Its one-sided activation—outputting 0 for negative inputs and a positive linear response otherwise—mimics the neural firing mechanism.

- This biological analogy makes ReLU an intuitive choice for neural networks and supports its widespread adoption in deep learning.

- Training Benefits:

- ReLU effectively addresses the vanishing gradient problem commonly encountered with Sigmoid or Tanh, particularly in deep networks.

- The function’s ability to output 0 for negative inputs results in sparse activation, where only a subset of neurons are active. This improves computational efficiency and reduces interdependence among neurons.

- Sparse activation can lead to better generalization as it reduces overfitting by forcing the network to learn more robust features.

- ReLU also accelerates convergence during training due to its linear nature for positive inputs, which allows gradients to flow unimpeded.

Limitations and Challenges

- Dying ReLU Problem:

- ReLU outputs 0 for all negative inputs, and during training, some neurons may end up with weights that cause their inputs to always be negative.

- These “dead” neurons contribute nothing to the model, reducing its capacity to learn and adapt to the data.

- This issue is especially prevalent with high learning rates or improper weight initialization.

- Solutions include using variants like Leaky ReLU or PReLU, which allow small non-zero gradients for negative inputs.

- Non-zero Centered:

- The ReLU function is not zero-centered, meaning the output is always either zero or a positive value.

- This lack of symmetry can cause the gradient updates to be disproportionately distributed across dimensions during optimization.

- The result is zig-zagging dynamics during gradient descent, which can slow convergence.

- Using Batch Normalization before applying ReLU can mitigate this issue by normalizing the inputs.

- Unbounded Activation:

- For positive inputs, ReLU produces outputs that can grow arbitrarily large, leading to potential gradient explosion during training.

- This unbounded nature may require careful weight initialization and learning rate tuning to ensure stable training.

- Gradient clipping and advanced optimizers like Adam can help manage this issue effectively.

- Variants like ReLU6, which clamps the output to a maximum value (e.g., 6), are sometimes used in mobile and embedded systems to address this limitation.

Comparison with Other Activation Functions

Activation functions play a critical role in neural networks by introducing non-linearity, enabling the model to learn complex patterns and relationships in data. While ReLU is one of the most widely used activation functions due to its simplicity and efficiency, other activation functions are also valuable for specific tasks and architectures.

The table below highlights key differences between ReLU and other popular activation functions:

| Activation Function | Equation | Advantages | Disadvantages | Use Cases |

|---|---|---|---|---|

| ReLU | f(x) = max(0, x) |

|

|

Default choice for hidden layers in deep networks and CNNs |

| Leaky ReLU | f(x) = max(0.01x, x) |

|

|

Suitable for deeper networks with risk of dying neurons |

| Sigmoid | f(x) = 1 / (1 + e^(-x)) |

|

|

Output layers in binary classification |

| Tanh | f(x) = (e^x - e^(-x)) / (e^x + e^(-x)) |

|

|

Hidden layers in shallow networks |

| Swish | f(x) = x * sigmoid(x) |

|

|

Advanced architectures like transformers |

💡Choosing the right activation function depends on the specific requirements of your task:

- ReLU: The default choice for most networks due to its speed and simplicity.

- Leaky ReLU: A better option when the risk of dying neurons is high.

- Sigmoid and Tanh: Suitable for shallow networks or output layers in binary classification and regression tasks.

- Swish and GELU: Modern activation functions designed for deep architectures and cutting-edge applications.

By understanding the strengths and limitations of these activation functions, you can optimize your neural network’s performance and stability for specific use cases.

Implementation in PyTorch

ReLU is a core component of PyTorch and can be easily implemented using built-in modules and functions. PyTorch provides flexibility in applying ReLU, whether you’re working with simple tensors or building complex neural networks.

💡There are two main ways to use ReLU in PyTorch:

- Using the

torch.nn.ReLUmodule: This method creates a ReLU object that you can reuse in your code. It’s perfect when building neural networks because you can add it as a layer, making your model structure cleaner and easier to manage. - Using the functional interface

torch.nn.functional.relu: This is a direct way to apply ReLU to data without creating an object. It’s great for quick computations or when you need to apply ReLU only once in a specific operation.

Below is an example demonstrating both approaches:

Basic Usage

import torch

import torch.nn as nn

# Method 1: Using nn.ReLU module

relu = nn.ReLU()

x = torch.tensor([[-1.0, 2.0, -3.0], [0.0, 4.0, -5.0]])

output = relu(x)

print("Input:")

print(x)

print("\nOutput:")

print(output)

# Method 2: Using functional interface

import torch.nn.functional as F

output_functional = F.relu(x)

print("\nOutput using functional interface:")

print(output_functional)In this example:

- The input tensor

xcontains both positive and negative values, making it ideal for showcasing ReLU’s behavior. - Using

torch.nn.ReLU, the module processes the tensor and replaces all negative values with0, leaving positive values unchanged. - The functional interface

torch.nn.functional.reluachieves the same result but does not require explicitly creating a module instance.

This flexibility allows developers to choose the method that best suits their workflow, whether it’s quick experimentation or structuring a full neural network pipeline.

In Neural Networks

ReLU is a common choice for activation functions in neural networks due to its simplicity and efficiency. When building a neural network, ReLU is typically applied after a linear layer to introduce non-linearity, allowing the model to learn complex patterns in the data.

Below is an example of integrating ReLU into a simple feedforward neural network:

import torch

import torch.nn as nn # Import torch.nn and assign the alias 'nn'

# Set random seed for reproducibility

torch.manual_seed(42)

class SimpleNet(nn.Module):

def __init__(self):

super(SimpleNet, self).__init__()

self.layer1 = nn.Linear(10, 20)

self.relu = nn.ReLU()

self.layer2 = nn.Linear(20, 1)

def forward(self, x):

x = self.layer1(x)

x = self.relu(x)

x = self.layer2(x)

return x

# Create and use the model

model = SimpleNet()

input_data = torch.randn(32, 10) # batch_size=32, input_features=10

output = model(input_data)In this example:

- Layer 1: A linear transformation is applied to the input data, mapping it from 10 features to 20 features.

- ReLU Activation: The output from the first layer is passed through the ReLU activation function, ensuring that all negative values are set to 0 while positive values remain unchanged.

- Layer 2: The transformed data is passed to another linear layer, reducing it to a single output feature, which might represent a regression output or a probability score.

This structure showcases how ReLU can be seamlessly integrated into a neural network pipeline, providing the non-linearity required for the model to capture complex relationships in the data.

Custom Implementation

While PyTorch provides built-in ReLU implementations, creating a custom ReLU function can be useful in scenarios where you need to modify its behavior or integrate additional logic. Below is an example of implementing a custom ReLU module from scratch:

import torch

import torch.nn as nn # Import torch.nn and assign the alias 'nn'

# Set random seed for reproducibility

torch.manual_seed(42)

class CustomReLU(nn.Module):

def __init__(self, inplace=False):

super(CustomReLU, self).__init__()

self.inplace = inplace

def forward(self, x):

return x.clamp(min=0) if self.inplace else torch.clamp(x, min=0)

# Usage example

custom_relu = CustomReLU()

x = torch.randn(5)

output = custom_relu(x)

print("Input:", x)

print("Output:", output)In this custom implementation:

- CustomReLU Class: Inherits from

torch.nn.Module, making it compatible with other PyTorch components and allowing it to be integrated into neural networks seamlessly. - In-place Option: The

inplaceparameter determines whether the input tensor should be modified directly to save memory (ifinplace=True) or if a new tensor should be created (default behavior). - Logic: The function uses

torch.clamp()to replace all negative values with 0, mimicking the behavior of the ReLU activation function.

Output: tensor([0.3367, 0.1288, 0.2345, 0.2303, 0.0000])

- The custom ReLU function processes each element of the input tensor:

- Values greater than

0are left unchanged (e.g.,0.3367,0.1288). - Negative values are replaced with

0(e.g.,-1.1229becomes0.0000).

- Values greater than

- This behavior aligns with the definition of the ReLU function, which is

f(x) = max(0, x).

💡Usage Tip: The example demonstrates how to apply the custom ReLU function to a random tensor. It behaves like the built-in ReLU while offering flexibility for features such as logging activations, adding thresholds, handling specific inputs, or including learnable parameters—ideal for debugging or experimentation.

Best Practices and Tips

Initialization

❗Proper initialization of weights and biases is crucial when using ReLU to ensure stable training and avoid issues like dying neurons:

- Use He Initialization:

He initialization (also known as Kaiming initialization) is specifically designed for activation functions like ReLU. It sets weights to values drawn from a distribution with variance inversely proportional to the number of input neurons, preventing gradients from exploding or vanishing.

- Bias Initialization:

Biases should be initialized to small positive values (e.g.,

0.01) to prevent neurons from being stuck at zero output, especially during the initial training phase. - Use Batch Normalization:

Batch normalization helps stabilize the distribution of inputs to ReLU, reducing sensitivity to initialization and improving training efficiency. By normalizing inputs, it mitigates the problem of dying neurons and helps the network converge faster.

Training

❗During training, careful monitoring and parameter selection can help maximize the effectiveness of ReLU:

- Monitor Activation Statistics:

Regularly inspect the range and distribution of activations during training. Sparse or inactive neurons may indicate potential issues like dying ReLU or poor initialization.

- Appropriate Learning Rates:

High learning rates can cause large weight updates, pushing inputs to ReLU into the negative range and leading to dying neurons. Start with a conservative learning rate and use techniques like learning rate schedules to refine it over time.

- Use Leaky ReLU for Deep Networks:

For very deep networks, where the risk of dying neurons is higher, consider using Leaky ReLU as a substitute. It introduces a small slope for negative values, allowing gradients to flow even when inputs are less than zero.

# Example of proper initialization

def init_weights(m):

if isinstance(m, nn.Linear):

# Apply He initialization for weights

torch.nn.init.kaiming_normal_(m.weight, nonlinearity='relu')

# Initialize biases to a small positive value

m.bias.data.fill_(0.01)

# Apply the initialization to all layers in the model

model.apply(init_weights)In this example, init_weights() ensures that all Linear layers in the model use He initialization for weights and small positive values for biases. Applying this method consistently across the network prevents gradient-related issues and ensures smooth training.

💡 Key Takeaways:

- He initialization optimizes weight variance for ReLU, ensuring effective gradient flow.

- Small positive biases avoid zero outputs, particularly at the start of training.

- Batch normalization further stabilizes inputs to ReLU, improving overall performance.

Common Issues and Solutions

Dying ReLU Problem

❗The “Dying ReLU” problem occurs when neurons consistently output zero for all inputs, effectively becoming inactive during training. This typically happens when weights are updated in a way that forces the inputs to ReLU into the negative range permanently. As a result, these neurons stop contributing to the learning process.

Solutions:

- Using Leaky ReLU or PReLU:

Leaky ReLU allows a small negative slope for inputs less than zero, ensuring that gradients continue to flow even for negative inputs. Parametric ReLU (PReLU) takes this further by learning the slope during training.

- Proper Initialization:

He initialization is specifically designed for ReLU and its variants. By setting weights with appropriate variance, it reduces the chances of neurons getting stuck in the zero output state.

- Careful Learning Rate Selection:

Excessively high learning rates can cause weights to fluctuate too widely, pushing neuron inputs into negative ranges where ReLU outputs zero. Gradually adjusting the learning rate or using adaptive optimizers like Adam can help mitigate this issue.

Gradient Explosion

❗ReLU outputs are unbounded for positive inputs, which means gradients can become extremely large during backpropagation. This can destabilize training, particularly in deep networks, where such effects compound over multiple layers.

Solutions:

- Gradient Clipping:

This technique sets a threshold for gradients during backpropagation, preventing them from exceeding a specified limit. By controlling the gradient magnitude, you can ensure stable training even when gradients attempt to explode.

- Batch Normalization:

Batch normalization normalizes inputs to ReLU, ensuring consistent activation ranges and reducing the risk of large gradients. It also helps the network converge faster by stabilizing weight updates.

- Proper Weight Initialization:

Using He initialization ensures that weights are scaled appropriately, keeping the output activations within a manageable range and preventing gradients from becoming excessively large.

Addressing these common issues proactively ensures smoother training and better performance for models using ReLU as their activation function.

Vanishing Gradient Problem and How ReLU Resolves It

❗What is the Vanishing Gradient Problem?

The vanishing gradient problem occurs during the training of deep neural networks when gradients of the loss function become extremely small as they are backpropagated through the network. This makes weight updates negligible, leading to stagnation in training and preventing the network from learning effectively.

This issue is particularly common with activation functions like Sigmoid and Tanh, which squash their inputs into a small range (e.g., [0, 1] for Sigmoid or [-1, 1] for Tanh). Gradients in these ranges become very small, especially for deeper layers.

Why Does It Happen?

- Activation Function Behavior: Functions like Sigmoid and Tanh flatten out for large positive or negative inputs, resulting in gradients close to zero.

- Deep Networks: As gradients are backpropagated through layers, they are repeatedly multiplied by the derivative of the activation function, leading to exponential shrinking in deep networks.

- Weight Initialization: Poor initialization can amplify the vanishing gradient problem by pushing activations into saturation regions.

How Does ReLU Resolve It?

ReLU addresses the vanishing gradient problem with its linear behavior for positive inputs. The derivative of ReLU is 1 for inputs greater than zero and 0 for inputs less than or equal to zero, ensuring non-zero gradients for active neurons. This makes gradient flow more efficient, particularly in deep networks.

💡 Key Advantages of ReLU in Preventing Vanishing Gradients:

- Non-Saturating Gradient: For positive inputs, ReLU’s gradient is constant, allowing gradients to propagate without diminishing.

- Sparsity: By setting negative inputs to zero, ReLU promotes sparse activations, reducing computational overhead.

- Efficient Gradient Flow: The piecewise linear behavior ensures gradients remain significant during backpropagation.

Python Example

Let’s compare the gradient flow of Sigmoid and ReLU in a simple neural network:

import torch

import torch.nn as nn

torch.manual_seed(42)

# Define input and weights

x = torch.tensor([[0.5, -0.3]], requires_grad=True)

weights = torch.tensor([[0.1, 0.2], [-0.1, 0.3]], requires_grad=True)

# Sigmoid activation

sigmoid = nn.Sigmoid()

output_sigmoid = sigmoid(torch.matmul(x, weights))

output_sigmoid.sum().backward()

print("Gradients with Sigmoid:", x.grad)

# Reset gradients

x.grad.zero_()

# ReLU activation

relu = nn.ReLU()

output_relu = relu(torch.matmul(x, weights))

output_relu.sum().backward()

print("Gradients with ReLU:", x.grad)Expected Output

Gradients with Sigmoid: tensor([[0.0750, 0.0500]]) Gradients with ReLU: tensor([[0.3000, 0.2000]])

Explanation:

- Sigmoid: The gradients are much smaller because the Sigmoid activation function squashes inputs, leading to reduced gradients during backpropagation.

- ReLU: The gradients remain significant, demonstrating how ReLU allows for better gradient flow and more effective training of deep networks.

Advanced Topics

ReLU Variants

While ReLU is a simple and effective activation function, its limitations have led to the development of various enhanced versions that address specific challenges:

- Leaky ReLU:

Introduces a small negative slope for inputs where

x < 0, ensuring that the gradient remains non-zero and flows during backpropagation. This mitigates the dying ReLU problem while retaining most of ReLU’s simplicity and efficiency. - PReLU (Parametric ReLU):

A generalization of Leaky ReLU, where the slope for negative inputs is learned during training. This makes PReLU adaptable to the dataset and network architecture, offering more flexibility than fixed-parameter variants.

- ELU (Exponential Linear Unit):

Smoothens the transition for negative values by using an exponential curve, which helps reduce bias shifts and achieve faster convergence. ELU outputs are also closer to zero mean, improving training dynamics.

Gradient-Based Improvements

Advanced activation functions aim to address gradient-related challenges, such as vanishing and exploding gradients, while improving learning dynamics:

- Swish:

A novel activation function defined as

f(x) = x * sigmoid(x). Swish combines linear and non-linear properties, offering smooth gradients and better optimization in deep networks. It is particularly useful for tasks involving very deep architectures. - GELU (Gaussian Error Linear Unit):

Provides a smoother and more natural transition compared to ReLU by using a Gaussian cumulative distribution function. This allows for better representation learning and works well in transformer-based models like BERT.

ReLU in Convolutional Networks

ReLU plays a crucial role in convolutional neural networks (CNNs) by introducing non-linearity, which is essential for learning complex patterns in data. It is applied after convolutional layers to preserve spatial structure while transforming feature maps.

- ReLU6:

A variant of ReLU that clamps outputs between

0and6. ReLU6 is commonly used in mobile networks like MobileNet to improve numerical stability and optimize performance in resource-constrained environments. - Benefits in CNNs:

- Maintains sparse activation, reducing computation.

- Works effectively with techniques like batch normalization to stabilize training.

- Preserves spatial features in feature maps when used after convolutional layers.

💡Key Takeaway:

The versatility and effectiveness of ReLU and its variants make them indispensable in modern neural networks. By understanding the strengths and limitations of each variant, practitioners can select the most suitable activation function for their specific task or architecture.

Summary

ReLU (Rectified Linear Unit) is a fundamental activation function in deep learning, valued for its simplicity and effectiveness in preventing vanishing gradients. While powerful, it faces challenges like the dying ReLU problem, which variants like Leaky ReLU address.

Success with ReLU depends on proper implementation: use He initialization, batch normalization, and appropriate learning rates. Monitor activations during training and consider alternatives like Swish or GELU for specific use cases.

Congratulations on reading to the end of this tutorial!

For further reading, please go through the links provided in the section below.

Further Reading

-

PyTorch Documentation: torch.nn.ReLU

Official documentation for the

torch.nn.ReLUmodule, detailing its usage, parameters, and examples. -

PyTorch Documentation: torch.nn.functional.relu

Details on the functional interface for ReLU, providing flexibility for standalone usage.

-

Wikipedia: Rectifier (Neural Networks)

A comprehensive overview of ReLU and its variants, covering mathematical definitions and historical context.

- The Research Scientist Pod Deep Learning Frameworks Page

Have fun and happy researching!

Suf is a senior advisor in data science with deep expertise in Natural Language Processing, Complex Networks, and Anomaly Detection. Formerly a postdoctoral research fellow, he applied advanced physics techniques to tackle real-world, data-heavy industry challenges. Before that, he was a particle physicist at the ATLAS Experiment of the Large Hadron Collider. Now, he’s focused on bringing more fun and curiosity to the world of science and research online.